The following is a news article by Chris Barncard, writing for the University of Wisconsin-Madison, posted August 26, 2013, and with the title above:

It is natural to imagine that the sense of sight takes in the world as it is, simply passing on what the eyes collect from light reflected by the objects around us. But the eyes do not work alone. What we see is a function not only of incoming visual information, but also of how that information is interpreted in light of other visual experiences, and it may even be influenced by language.

Words can play a powerful role in what we see, according to a study published this month by UW-Madison cognitive scientist and psychology professor Gary Lupyan and Emily Ward, a Yale University graduate student, in the journal Proceedings of the National Academy of Sciences. “Perceptual systems do the best they can with inherently ambiguous inputs by putting them in context of what we know, what we expect,” Lupyan says. “Studies like this are helping us show that language is a powerful tool for shaping perceptual systems, acting as a top-down signal to perceptual processes. In the case of vision, what we consciously perceive seems to be deeply shaped by our knowledge and expectations.”

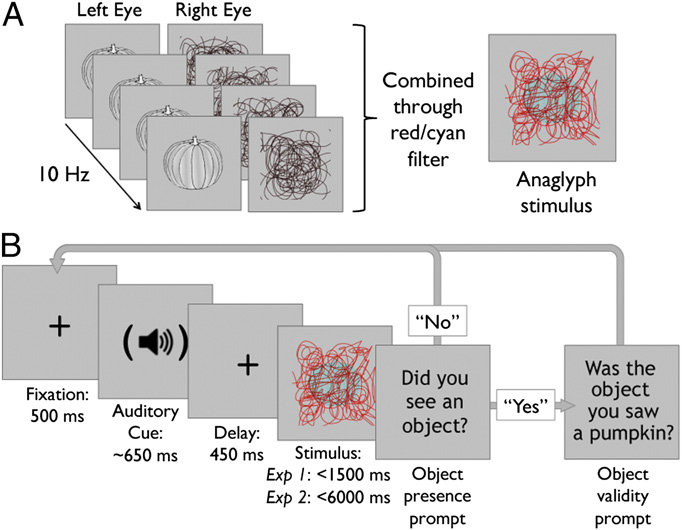

And those expectations can be altered with a single word. To show how deeply words can influence perception, Lupyan and Ward used a technique called continuous flash suppression to render a series of objects invisible for a group of volunteers. Each person was shown a picture of a familiar object — such as a chair, a pumpkin or a kangaroo — in one eye. At the same time, their other eye saw a series of flashing, “squiggly” lines. “Essentially, it’s visual noise,” Lupyan says. “Because the noise patterns are high-contrast and constantly moving, they dominate, and the input from the other eye is suppressed.”

Immediately before looking at the combination of the flashing lines and suppressed object, the study participants heard one of three things: the word for the suppressed object (“pumpkin,” when the object was a pumpkin), the word for a different object (“kangaroo,” when the object was actually a pumpkin), or just static. Then researchers asked the participants to indicate whether they saw something or not. When the word they heard matched the object that was being wiped out by the visual noise, the subjects were more likely to report that they did indeed see something than in cases where the wrong word or no word at all was paired with the image.

“Hearing the word for the object that was being suppressed boosted that object into their vision,” Lupyan says. And hearing an unmatched word actually hurt study subjects’ chances of seeing an object.

“With the label, you’re expecting pumpkin-shaped things,” Lupyan says. “When you get a visual input consistent with that expectation, it boosts it into perception. When you get an incorrect label, it further suppresses that.”

Experiments have shown that continuous flash suppression interrupts sight so thoroughly that there are no signals in the brain to suggest the invisible objects are perceived, even implicitly. “Unless they can tell us they saw it, there’s nothing to suggest the brain was taking it in at all,” Lupyan says. “If language affects performance on a test like this, it indicates that language is influencing vision at a pretty early stage. It’s getting really deep into the visual system.”

The study demonstrates a deeper connection between language and simple sensory perception than previously thought, and one that makes Lupyan wonder about the extent of language’s power. The influence of language may extend to other senses as well. “A lot of previous work has focused on vision, and we have neglected to examine the role of knowledge and expectations on other modalities, especially smell and taste,” Lupyan says. “What I want to see is whether we can really alter threshold abilities,” he says. “Does expecting a particular taste, for example, allow you to detect a substance at a lower concentration?”

If you’re drinking a glass of milk, but thinking about orange juice, he says, that may change the way you experience the milk. “There’s no point in figuring out what some objective taste is,” Lupyan says. “What’s important is whether the milk is spoiled or not. If you expect it to be orange juice, and it tastes like orange juice, it’s fine. But if you expected it to be milk, you’d think something was wrong.”